1 The Nature and Types of Science

At the end of this chapter, you will be able to:

- explain the role that control groups play in studying the natural world

- describe the nature of science

- compare and contrast hypotheses and predictions

- compare and contrast basic science and applied science

- describe questions from an ultimate perspective as well as a proximate perspective

Introduction

Science is a very specific way of learning, or knowing, about the world. Humans have used the process of science to learn a huge amount about the way the natural world works. Science is responsible for amazing innovations in medicine, hygiene, and technology. There are, however, areas of knowledge and human experience that the methods of science cannot be applied to. These include such things as answering purely moral questions, aesthetic questions, or what can be generally categorized as spiritual questions. Science cannot investigate these areas because they are outside the realm of material phenomena, the phenomena of matter and energy, and cannot be observed and measured. Science is interested in answering questions about the physical world. Science has to be able to observe and measure things in the natural world.

Here are some examples of questions that can be answered using science:

- What is the optimum humidity for the growth and proliferation of the giant puffball fungus (Calvatia gigantea)?

- Are female birds attracted to male birds that have specific colors or traits?

- What virus causes a certain disease in a population of sheep?

- What dose of the antibiotic amoxicillin is optimal for treating pneumonia in an 80-year-old?

On the other hand, here are some examples of questions that cannot be answered using science:

- Where do ghosts live?

- How ethical is it to genetically engineer human embryos?

- What is the effect of fairies on Texan woodland ecosystems?

A scientific question is one that can be answered by using the process of science on the natural world (testing hypotheses, making observations about the natural world, designing experiments).

Sometimes you will directly make observations about the natural world that lead you to ask scientific questions; other times you might hear or read something that leads you to ask a question. Regardless of how you make your initial observation, you will want to research your topic before setting up an experiment. When you’re learning about a topic, it’s important to use credible sources of information.

Observations vs. Inferences

The scientific process typically starts with an observation (often a problem to be solved) that leads to a question. Remember that science is very good at answering questions having to do with observations about the natural world but is very bad at answering questions having to do with morals, ethics, or personal opinions. If a question has to do with an opinion or an ethically complex matter, it is likely not answerable using science; however, a question that involves observation and data collection, as well as the use of quantitative measures, is likely answerable using science.

Let’s think about a simple problem that starts with an observation and apply the scientific method to solve the problem. One Monday morning, a student arrives at class and quickly discovers that the classroom is too warm. That is an observation that also describes a problem: the classroom is too warm. The student then asks a question: “Why is the classroom so warm?”

Now, let’s get back to contrasting observations and inferences, which students frequently confuse. An observation usually comes from a primary source, that is, a source that directly witnessed or experienced a certain event. In other words, an observation is something that is directly seen or measured. For instance, if you are a polar bear researcher who is observing the behavior and dietary tendencies of a polar bear from an observatory in Greenland, you are likely to notice that the polar bear consumes meat exclusively. Then, you may infer that the polar bear has a jaw morphology optimized for chewing meat and a digestive tract optimized for digesting it. However, you cannot scrutinize the jaw morphology or the digestive tract directly (unlike the polar bear’s diet, which is evident to you), so this remains an inference rather than an observation. An inference is a conclusion drawn from logical reasoning applied to observed evidence. Thus, observations are required to make an inference, but the two are distinct.

Methods of Scientific Investigation and Scientific Inquiry

One thing is common to all forms of science: an ultimate goal “to know.” Curiosity and inquiry are the driving forces for the development of science. Scientists seek to understand the world and the way it operates. Two methods of logical thinking are used: inductive reasoning and deductive reasoning (Fig 1).

Inductive reasoning is a form of logical thinking that uses related observations to arrive at a general conclusion. This type of reasoning is common in descriptive science. A life scientist such as a biologist makes observations and records them. These data can be qualitative (descriptive) or quantitative (consisting of numbers), and the raw data can be supplemented with drawings, pictures, photos, or videos. From many observations, the scientist can infer conclusions (inductions) based on evidence. Inductive reasoning involves formulating generalizations inferred from careful observation and the analysis of a large amount of data.

Deductive reasoning or deduction is the type of logic used in hypothesis-based science. Recall what a hypothesis is. In deductive reasoning, the pattern of thinking moves in the opposite direction as compared to inductive reasoning. Deductive reasoning is a form of logical thinking that uses a general principle or law to forecast specific results. From those general principles, a scientist can extrapolate and predict the specific results that would be valid as long as the general principles are valid. For example, a prediction would be that if the climate is becoming warmer in a region, the distribution of plants and animals should change. Comparisons have been made between distributions in the past and the present, and the many changes that have been found are consistent with a warming climate. Finding the change in distribution is evidence that the climate change conclusion is a valid one.

Figure 1: Scientists use two types of reasoning, inductive and deductive reasoning, to advance scientific knowledge. The conclusion from inductive reasoning can often become the premise for deductive reasoning.

Deductive and inductive reasoning are related to the two main pathways of scientific study, that is, descriptive science and hypothesis-based science. Descriptive (or discovery) science aims to observe, explore, and discover, while hypothesis-based science begins with a specific question or problem and a potential answer or solution that can be tested. The boundary between these two forms of study is often blurred because most scientific endeavors combine both approaches. Observations lead to questions, questions lead to forming a hypothesis as a possible answer to those questions, and then the hypothesis is tested. Thus, descriptive science and hypothesis-based science are in continuous dialogue.

Testing Hypotheses

Biologists study the living world by posing questions about it and seeking science-based responses. This approach is often referred to as the scientific method. The scientific method was used even in ancient times, but it was first documented by England’s Sir Francis Bacon (1561–1626), who set up inductive methods for scientific inquiry. The scientific method is not exclusively used by biologists but can be applied to almost anything as a logical problem-solving method.

Recall the simple problem introduced earlier: one Monday morning, a student arrives at class and observes that the classroom is too warm, then asks, “Why is the classroom so warm?”

A hypothesis is a suggested general explanation that can be tested. Once a hypothesis has been chosen, an experiment or study is designed, and that design leads to a specific prediction. For example, to address the warm-classroom problem, one hypothesis might be: “Classroom density affects temperature.”

Once a hypothesis has been selected, you can design an experiment and, from that design, determine a prediction. A prediction is a specific expected outcome based on the experiment you will design. A hypothesis is a general statement of explanation, but a prediction is specific to what you will do in the experiment. For example, you might hypothesize that density affects the classroom temperature, and then you would predict that adding 20 more students to the classroom will increase its temperature by 2 degrees. Notice how specific this is – you have a certain number of students and a certain number of degrees.

To be scientifically useful, a hypothesis must be testable. For example, a hypothesis that depends on what a bear thinks is not testable, because it can never be known what a bear thinks. It should also be falsifiable, meaning that it can be disproven by experimental results. An example of an unfalsifiable hypothesis is “Botticelli’s Birth of Venus is beautiful.” There is no experiment that might show this statement to be false. To test a hypothesis, a researcher will conduct one or more experiments designed to eliminate one or more of the competing hypotheses.

A hypothesis can be disproven, or eliminated, but it can never be proven. Science does not deal in proofs the way mathematics does. Technically, to prove anything in biology, you would have to sample every cell or organism alive today; instead, scientists rely on a sample, use statistics to analyze the data set, and then draw conclusions. If an experiment fails to disprove a hypothesis, then we find support for that explanation; however, over time and with new ideas and technology, a better explanation may be found, or a more carefully designed experiment may falsify the hypothesis.

Each experiment will have one or more variables and one or more controls. A variable is any part of the experiment that can vary or change during the experiment. A control or a control group is a part of the experiment in which the researcher “knows” what to expect – they “know” the results. A control group can be manipulated (e.g. researcher adds water to a pot of plants) and a control group can change (e.g. the plants grow in height). Some people will say a control has characteristics that are “not manipulated” and “don’t change”, but as explained above, these are not exactly right for describing control groups. Again, a control group is a group in your experiment where you know the expected outcome and therefore can use this group as a comparison against the treatment groups, where you don’t know the outcome (although you have made a prediction). For example, the scientist “knows” a pot of plants with water added will grow, and they have predicted that adding 20 mL of toxin to the pot will stunt the plant’s growth.

Another important aspect of experimental design is minimizing confounding variables. It is important to test only one variable at a time so you will be able to tell if that variable is making a difference in your experiment. For example, if you had different fertilizers and also different amounts of water, you wouldn’t know for sure if the water or the fertilizer was causing the plants to differ in growth rate. A confounding variable, then, is a variable that is not the main variable of interest (the independent variable) but that has an unintended effect on the dependent variable, making it difficult to determine the true cause-and-effect relationship between the independent variable and the dependent variable. Because confounding variables are variables that researchers did not intend to study but that end up affecting the dependent variable, they can lead to inaccurate or misleading conclusions.
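To see why changing two things at once is a problem, here is a minimal sketch using a toy growth model. The function `plant_growth_cm`, its coefficients, and the water amounts are all invented for illustration; they are not measurements. The point is only that when water and fertilizer both differ between groups, the observed difference mixes the two effects.

```python
def plant_growth_cm(fertilizer: str, water_ml: int) -> float:
    """Toy model with made-up numbers: water helps, and fertilizer B adds 1 cm."""
    fertilizer_bonus = {"A": 0.0, "B": 1.0}[fertilizer]
    return 5.0 + 0.02 * water_ml + fertilizer_bonus


# Confounded design: the two groups differ in fertilizer AND in water.
confounded_diff = plant_growth_cm("B", 300) - plant_growth_cm("A", 100)

# Controlled design: water is held constant, so only fertilizer differs.
controlled_diff = plant_growth_cm("B", 200) - plant_growth_cm("A", 200)

print(f"Confounded comparison: B - A = {confounded_diff:.1f} cm")
print(f"Controlled comparison: B - A = {controlled_diff:.1f} cm")
```

In this toy model the confounded comparison suggests a much larger fertilizer effect than the controlled comparison, because most of the difference actually comes from the extra water.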

Specific Types of Control Groups

As stated above, a control group allows you to make a comparison that is important for interpreting your results. Control groups are samples that help you to determine that differences between your experimental or treatment groups and the control groups are due to your treatment rather than a different variable – they eliminate alternate explanations for your results (including experimental error and experimenter bias). They increase reliability, often through the comparison of control measurements and measurements of the experimental groups. If the results of the experimental group differ from the control group, the difference must be due to the hypothesized treatment manipulation, rather than some outside factor. It is common in complex experiments (such as those published in scientific journals) to have more control groups than experimental groups.

Example:

Question: Which fertilizer will produce the greatest number of tomatoes when applied to the plants?

Hypothesis: Fertilizers will affect tomato growth.

Prediction: If I apply different brands of fertilizer to tomato plants, the most tomatoes will be produced from plants fertilized with Brand A because Brand A advertises that it produces twice as many tomatoes as other leading brands.

Experiment: Purchase 10 tomato plants of the same type from the same nursery. Pick plants that are similar in size and age. Divide the plants into two groups of 5. Apply Brand A to the first group and Brand B to the second group according to the instructions on the packages. After 10 weeks, count the number of tomatoes on each plant.

Independent Variable: Brand of fertilizer.

Dependent Variable: Number of tomatoes.

The number of tomatoes produced depends on the brand of fertilizer applied to the plants.

Constants: amount of water, type of soil, size of pot, amount of light, type of tomato plant, length of time plants were grown.

Confounding variables: any of the above that are not held constant, plant health, diseases present in the soil or plant before it was purchased.

Results: Tomatoes fertilized with Brand A produced an average of 20 tomatoes per plant, while tomatoes fertilized with Brand B produced an average of 10 tomatoes per plant.

You’d want to use Brand A next time you grow tomatoes, right? But what if I told you that plants grown without fertilizer produced an average of 30 tomatoes per plant! Now what will you use on your tomatoes?

Results including control group: Tomatoes that received no fertilizer produced more tomatoes than either brand of fertilizer.

Conclusion: Although Brand A fertilizer produced more tomatoes than Brand B, neither fertilizer should be used because plants grown without fertilizer produced the most tomatoes.
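The comparison in this example can be sketched in a few lines of Python. The per-plant counts below are hypothetical; only the group averages (20, 10, and 30 tomatoes per plant) come from the example, and the sketch assumes the no-fertilizer control is a third group of 5 plants.

```python
from statistics import mean

# Hypothetical per-plant tomato counts (5 plants per group). Only the group
# averages match the example; the individual counts are made up.
tomato_counts = {
    "Brand A": [18, 22, 20, 19, 21],
    "Brand B": [9, 11, 10, 12, 8],
    "No fertilizer (control)": [28, 32, 30, 31, 29],
}

# Average number of tomatoes per plant in each group.
group_means = {group: mean(counts) for group, counts in tomato_counts.items()}

# The group with the highest average yield.
best_group = max(group_means, key=group_means.get)

for group, average in group_means.items():
    print(f"{group}: {average:.1f} tomatoes per plant")
print(f"Highest average yield: {best_group}")
```

Without the control row, Brand A would look like the best choice; including it changes the conclusion.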

Positive control groups are often used to show that the experiment is valid and that everything has worked correctly. You can think of a positive control group as being a group where you should be able to observe the thing that you are measuring (“the thing” should happen). The conditions in a positive control group should guarantee a positive result. If the positive control group doesn’t work, there may be something wrong with the experimental procedure.

Negative control groups are used to show whether a treatment had any effect. If your treated sample is the same as your negative control group, your treatment had no effect. You can also think of a negative control group as being a group where you should NOT be able to observe the thing that you are measuring (“the thing” shouldn’t happen), or where you should not observe any change in the thing that you are measuring (there is no difference between the treated and control group). The conditions in a negative control group should guarantee a negative result. A placebo group is an example of a negative control group.

As a general rule, you need a positive control to validate a negative result, and a negative control to validate a positive result. Let’s take a look at these through some illustrations.

- You read an article in the NY Times that says some spinach is contaminated with Salmonella. You want to test the spinach you have at home in your fridge, so you wet a sterile swab and wipe it on the spinach, then wipe the swab on a nutrient plate (petri plate).

- You observe growth. Does this mean that your spinach is really contaminated? Consider an alternate explanation for growth: the swab, the water, or the plate is contaminated with bacteria. You could use a negative control to determine which explanation is true. If a swab is wet and wiped on a nutrient plate, do bacteria grow?

- You don’t observe growth. Does this mean that your spinach is really safe? Consider an alternate explanation for no growth: Salmonella isn’t able to grow on the type of nutrient you used in your plates. You could use a positive control to determine which explanation is true. If you wipe a known sample of Salmonella bacteria on the plate, do bacteria grow?

- In a drug trial, one group of subjects is given a new drug, while a second group is given a placebo drug (a sugar pill; something that appears like the drug but doesn’t contain the active ingredient). Reduction in disease symptoms is measured. The second group receiving the placebo is a negative control group. You might expect a reduction in disease symptoms purely because the person knows they are taking a drug so they should be getting better. If the group treated with the real drug does not show more of a reduction in disease symptoms than the placebo group, the drug doesn’t really work. The placebo group sets a baseline against which the experimental group (treated with the drug) can be compared. A positive control group is not required for this experiment.

- A baseline refers to a point of reference or a starting point against which changes or measurements are compared. Baselines are essential for several reasons such as comparative analysis, quantification of change, and validity.

- In an experiment measuring the preference of birds for various types of food, a negative control group would be a “placebo feeder”. This would be the same type of feeder, but with no food in it. Birds might visit a feeder just because they are interested in it; an empty feeder would give a baseline level for bird visits. A positive control group might be a food that birds are known to like. This would be useful because if no birds visited any of the feeders, you couldn’t tell if this was because there were no birds around or because they didn’t like any of your food offerings!

- To test the effect of pH on the function of an enzyme, you would want a positive control group where you knew the enzyme would function (pH not changed) and a negative control group where you knew the enzyme would not function (no enzyme added). You need the positive control group so you know your enzyme is working: if you didn’t see a reaction in any of the tubes with the pH adjusted, you wouldn’t know if it was because the enzyme wasn’t working at all or because the enzyme just didn’t work at any of your tested pH. You need the negative control group so you can ensure that there is no reaction taking place in the absence of the enzyme; if the reaction proceeds without the enzyme, your results are meaningless.
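The rule that a negative control validates a positive result, and a positive control validates a negative result, can be written as a small decision function. This is a sketch of the spinach swab illustration only; the function name `interpret_swab_test` and the wording of the returned messages are hypothetical.

```python
def interpret_swab_test(sample_grew: bool,
                        negative_control_grew: bool,
                        positive_control_grew: bool) -> str:
    """Interpret a spinach swab plate using the two control plates."""
    if sample_grew:
        # A positive result can only be trusted if the negative control
        # (wet swab, no spinach) stayed clean.
        if negative_control_grew:
            return "inconclusive: the swab, water, or plate may be contaminated"
        return "growth came from the spinach sample"
    # A negative result can only be trusted if the positive control
    # (known Salmonella sample) grew on the same kind of plate.
    if not positive_control_grew:
        return "inconclusive: the plate may not support growth"
    return "no growth detected from the spinach sample"


print(interpret_swab_test(True, False, True))
print(interpret_swab_test(True, True, True))
print(interpret_swab_test(False, False, False))
```

Note that growth on the plate shows bacteria were present on the spinach; it does not by itself identify them as Salmonella.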

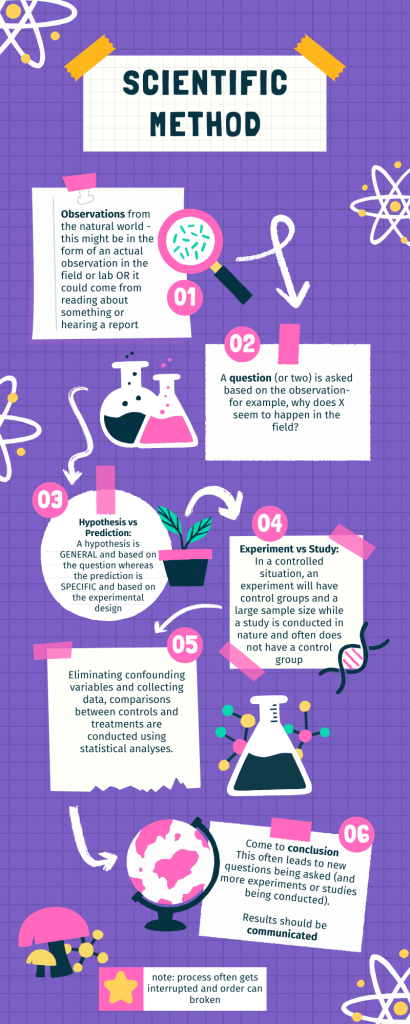

Scientists often perform the scientific method in a more circular and jagged fashion than we are initially taught (Fig 2). For example, a scientist might start with some results and this leads to a question. Then after setting up an experiment, an observation is made, which might lead to another question, and so on. Sometimes an experiment leads to conclusions that favor a change in approach; often, an experiment brings entirely new scientific questions to the puzzle.

Figure 2: The scientific method is a series of steps, typically beginning with an observation and concluding with the reporting of results; however, scientists often jump back and forth as needed, and some steps can be skipped entirely. If the acquired data do NOT support a given hypothesis, alternative hypotheses should be considered and tested. Thus, this is often an iterative process.

To determine if the results of their experiment are significant, researchers use a variety of statistical analyses. Statistical analyses help researchers determine whether the observations from their experiments are meaningful or due to random chance. For example, if a researcher observes a difference between the control group and experimental group, should they treat it as a real effect of the independent variable or simply random chance? A result is considered to have statistical significance when it is very unlikely to have occurred under the null hypothesis, which states that there is no difference between the two groups. Statistical results themselves are not entirely objective and can depend on many assumptions, including the null hypothesis itself. A researcher must consider potential biases in their analyses, just as they do confounding variables in their experimental design. Two factors that play a major role in the power of an experiment to detect meaningful statistical differences are sample size and replication. Sample size refers to the number of observations within each treatment, while replication refers to the number of times the same experimental treatment is repeated. In general, the bigger the sample size and the more replication, the more confidence a researcher can have in the outcome of their study.
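One way to make “very unlikely under the null hypothesis” concrete is a permutation test. If the treatment truly made no difference, the group labels would be interchangeable, so we can relabel the plants in every possible way and ask how often a difference at least as large as the observed one appears. The sketch below is one illustrative approach among many statistical analyses; the plant heights are invented, and `permutation_p_value` is a hypothetical helper name.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(control, treatment):
    """Two-sided exact permutation test on the difference in group means."""
    pooled = list(control) + list(treatment)
    indices = range(len(pooled))
    observed = abs(mean(treatment) - mean(control))

    at_least_as_extreme = 0
    total = 0
    # Try every way of choosing which plants are labeled "control".
    for control_idx in combinations(indices, len(control)):
        chosen = set(control_idx)
        relabeled_control = [pooled[i] for i in chosen]
        relabeled_treatment = [pooled[i] for i in indices if i not in chosen]
        difference = abs(mean(relabeled_treatment) - mean(relabeled_control))
        if difference >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_extreme += 1
        total += 1
    return at_least_as_extreme / total


# Hypothetical plant heights in cm (made-up data, 5 plants per group).
water_only = [30, 32, 31, 29, 33]
water_plus_toxin = [24, 26, 25, 27, 23]

p_value = permutation_p_value(water_only, water_plus_toxin)
print(f"p-value: {p_value:.4f}")
```

With these made-up heights, only 2 of the 252 possible relabelings produce a difference as large as the observed one, so the p-value is small. Researchers often compare such a value against a threshold chosen in advance (0.05 is a common convention), and with fewer plants per group the smallest achievable p-value would be larger, which is one reason sample size matters.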

Types of Science

The scientific community has been debating for the last few decades about the value of different types of science. Is it valuable to pursue science for the sake of simply gaining knowledge, or does scientific knowledge only have worth if we can apply it to solving a specific problem or to better our lives? This question focuses on the differences between two types of science: basic science and applied science.

Basic science or “pure” science seeks to expand knowledge regardless of the short-term application of that knowledge. It is not focused on developing a product or a service of immediate public or commercial value. The immediate goal of basic science is knowledge for knowledge’s sake, although this does not mean that, in the end, it may not result in a practical application. For example, trying to understand the structure of DNA would fall under this basic science category.

In contrast, applied science aims to use science to solve real-world problems, making it possible, for example, to improve a crop yield or find a cure for a particular disease. In applied science, you might use information from basic science (e.g. the structure of DNA) in a direct way (e.g. how to determine who committed a crime through DNA fingerprinting).

Some individuals may perceive applied science as “useful” and basic science as “useless”; however, a careful look at the history of science reveals that basic knowledge has resulted in many remarkable applications of great value. Many scientists think that a basic understanding of science is necessary before researchers develop an application; therefore, applied science relies on the results that researchers generate through basic science. Other scientists think that it is time to move on from basic science in order to find solutions to actual problems. Both approaches are valid. There are indeed problems that demand immediate attention; however, scientists would find few solutions without the help of the wide knowledge foundation that basic science generates.

An example of the link between basic and applied research is the Human Genome Project, a study in which researchers analyzed and mapped each human chromosome to determine the precise sequence of DNA subunits and each gene’s exact location. (The gene is the basic unit of heredity. An individual’s complete collection of genes is his or her genome.) Researchers have studied other less complex organisms as part of this project to gain a better understanding of human chromosomes. The Human Genome Project relied on basic research with simple organisms and, later, with the human genome. An important end goal eventually became using the data for applied research, seeking cures and early diagnoses for genetically related diseases.

While scientists usually carefully plan research efforts in both basic science and applied science, note that some discoveries are made by serendipity, that is, by a fortunate accident or a lucky surprise. Scottish biologist Alexander Fleming discovered penicillin when he accidentally left a petri dish of Staphylococcus bacteria open. An unwanted mold grew on the dish, killing the bacteria. Fleming’s curiosity to investigate the reason behind the bacterial death, followed by his experiments, led to the discovery of the antibiotic penicillin, which is produced by the fungus Penicillium. Even in the highly organized world of science, luck, when combined with an observant, curious mind, can lead to unexpected breakthroughs.

Scientists frame their questions and experiments around two general categories: ultimate and proximate. Ultimate questions or explanations take a longer evolutionary perspective on an observation. They might address why a certain trait has evolved over evolutionary time and what has contributed to the advantage or survival of that trait or organism. A proximate question or explanation looks at the more immediate mechanism behind an observation. Here we often find the specific mechanisms that drive the observation. We can state that ultimate questions address the “why” questions and proximate questions address the “how” and “what” questions.

As an example, consider the observation that ice cream tastes sweet. The proximate explanation would focus on the mechanics of this – our taste buds detect sugars to guide appetite and trigger physiological processes for absorbing nutrients and adjusting metabolism. The ultimate explanation would focus on how detecting these carbohydrates is important for gaining energy for survival. Another ultimate explanation could be that by detecting palatable and sweet nutrients, we avoid chemical threats from the environment.

The Importance of Peer-Review in Science

Whether scientific research is basic science or applied science, scientists must share their findings for other researchers to expand and build upon their discoveries. Collaboration with other scientists—when planning, conducting, and analyzing results—is important for scientific research. For this reason, important aspects of a scientist’s work are communicating with peers and disseminating results to peers. Scientists can share results by presenting them at a scientific meeting or conference, but this approach can reach only the select few who are present. Instead, most scientists present their results in peer-reviewed manuscripts that are published in scientific journals. Peer-reviewed manuscripts are scientific papers that a scientist’s colleagues or peers review. Scholarly work is checked by a group of experts in the same field to make sure it meets the journal standards before it is accepted or published. These colleagues are qualified individuals, often experts in the same research area, who judge whether or not the scientist’s work is suitable for publication. The process of peer review helps to ensure that the research in a scientific paper or grant proposal is original, significant, logical, and thorough.

Grant proposals, which are requests for research funding, are also subject to peer review. Scientists publish their work so other scientists can reproduce their experiments under similar or different conditions to expand on the findings.

You’ve probably done a writing assignment or other project during which you have participated in a peer review process. During this process, your project was critiqued and evaluated by people of similar competence to yourself (your peers). This gave you feedback on how to improve your work. Scientific articles typically go through a peer review process before they are published in an academic journal, including conference journals. In this case, the peers who are reviewing the article are other experts in the specific field about which the paper is written. This allows other scientists to critique experimental design, data, and conclusions before that information is published in an academic journal. Often, the scientists who did the experiment and who are trying to publish it are required to do additional work or edit their paper before it is published. The goal of the scientific peer review process is to ensure that published primary articles contain the best possible science.

Many journals, as well as the popular press, do not use a peer-review system. A large number of online open-access journals (journals with articles available without cost) are now available; many of these use rigorous peer-review systems, but some do not. Results of any studies published in these forums without peer review are not reliable and should not form the basis for other scientific work. In one exception, journals may allow a researcher to cite a personal communication from another researcher about unpublished results with the cited author’s permission.

The peer-review process for oral communications and poster presentations at scientific conferences is a little less grueling than for journals, although a peer-review process is still applied before the work is accepted by conference organizers. Although many scientists will grimace at the mention of ‘peer review’, it is through this process that we increase the likelihood that valid science (and not pseudoscience) is shared with the world. Peer review is an essential part of the scientific process because its results inform important economic and health-related decisions that affect the future prosperity of humanity.

As with all forms of communication, scientific research articles, oral communications, and poster presentations need to be prepared and delivered according to specific guidelines and using a particular language. Student scientists must begin to understand these guidelines and are given opportunities to practice these forms of communication.

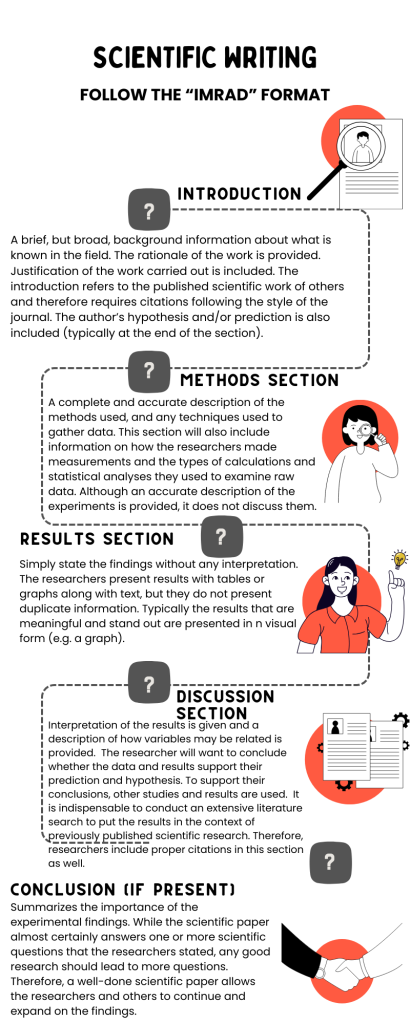

A scientific paper is very different from creative writing. Although creativity is required to design experiments, there are fixed guidelines when it comes to presenting scientific results. First, scientific writing must be brief, concise, and accurate. A scientific paper needs to be succinct but detailed enough to allow peers to reproduce the experiments.

The scientific paper consists of several specific sections—introduction, materials and methods, results, and discussion. This structure is sometimes called the “IMRaD” format. There are usually acknowledgment and reference sections as well as an abstract (a concise summary) at the beginning of the paper. There might be additional sections depending on the type of paper and the journal where it will be published. For example, some review papers require an outline.

Pseudoscience and Other Misuses of Science

Pseudoscience is a claim, belief, or practice that is presented as scientific but does not adhere to the standards and methods of science. True science is based on repeated evidence-gathering and testing of falsifiable hypotheses. Pseudoscience does not adhere to these criteria. In addition to phrenology, some other examples of pseudoscience include astrology, extrasensory perception (ESP), reflexology, reincarnation, and Scientology.

Characteristics of Pseudoscience

Whether a field is actually science or just pseudoscience is not always clear. However, pseudoscience generally exhibits certain common characteristics. Indicators of pseudoscience include:

- The use of vague, exaggerated, or untestable claims: Many claims made by pseudoscience cannot be tested with evidence. As a result, they cannot be falsified, even if they are not true.

- An over-reliance on confirmation rather than refutation: Any incident that appears to justify a pseudoscience claim is treated as proof of the claim. Claims are assumed true until proven otherwise, and the burden of disproof is placed on skeptics of the claim.

- A lack of openness to testing by other experts: Practitioners of pseudoscience avoid subjecting their ideas to peer review. They may refuse to share their data and justify the need for secrecy with claims of proprietary interests or privacy.

- An absence of progress in advancing knowledge: In pseudoscience, ideas are not subjected to repeated testing followed by rejection or refinement, as hypotheses are in true science. Ideas in pseudoscience may remain unchanged for hundreds — or even thousands — of years. In fact, the older an idea is, the more it tends to be trusted in pseudoscience.

- Personalization of issues: Proponents of pseudoscience adopt beliefs that have little or no rational basis, so they may try to confirm their beliefs by treating critics as enemies. Instead of arguing to support their own beliefs, they attack the motives and character of their critics.

- The use of misleading language: Followers of pseudoscience may use scientific-sounding terms to make their ideas sound more convincing. For example, they may use the formal name dihydrogen monoxide to refer to plain old water.

Persistence of Pseudoscience

Despite failing to meet scientific standards, many pseudosciences survive. Some pseudosciences remain very popular with large numbers of believers. A good example is astrology and zodiac signs.

Astrology is the study of the movements and relative positions of celestial objects as a means for divining information about human affairs and terrestrial events. Many ancient cultures attached importance to astronomical events, and some developed elaborate systems for predicting terrestrial events from celestial observations. Throughout most of its history in the West, astrology was considered a scholarly tradition and was common in academic circles. With the advent of modern Western science, astrology was called into question. It was challenged on both theoretical and experimental grounds, and it was eventually shown to have no scientific validity or explanatory power.

Today, astrology is considered a pseudoscience, yet it continues to have many devotees. Most people know their astrological sign, and many people are familiar with the personality traits supposedly associated with their sign. Astrological readings and horoscopes are readily available online and in print media, and a lot of people read them, even if only occasionally. About a third of all adult Americans actually believe that astrology is scientific. Studies suggest that the persistent popularity of pseudosciences such as astrology reflects a high level of scientific illiteracy. It seems that many Americans do not have an accurate understanding of scientific principles and methodology. They are not convinced by scientific arguments against their beliefs.

Dangers of Pseudoscience

Belief in astrology is unlikely to cause a person harm, but belief in some other pseudosciences might, especially in health care-related areas. Treatments that seem scientific but are not may be ineffective, expensive, and even dangerous to patients. Seeking out pseudoscientific treatments may also delay patients in seeking, or prevent them from seeking, scientifically based medical treatments that have been tested and found safe and effective. In short, irrational health care may not be harmless.

Junk Science or “Bad Science”

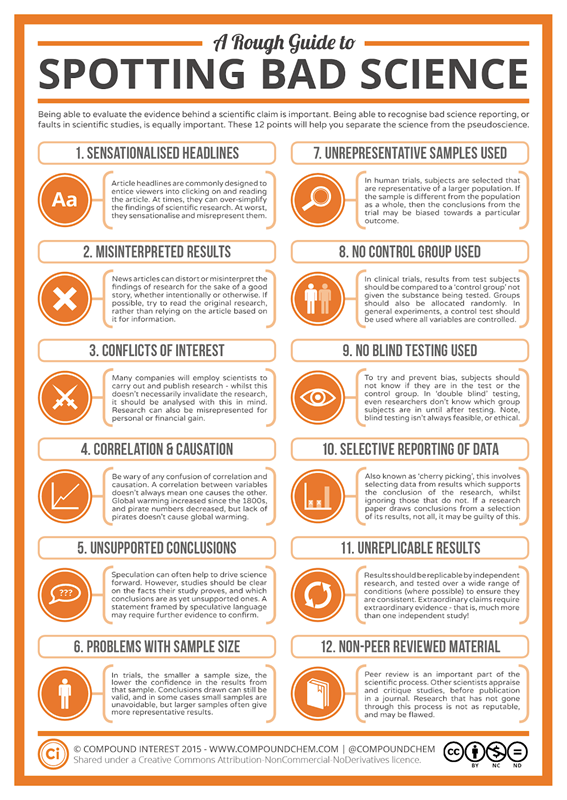

As discussed above, good science practices include following the scientific method, having a testable hypothesis, using good experimental design (including large sample sizes and replication), analyzing results statistically, and subjecting interpretation and analysis to peer review (Fig 3). If a researcher skips these steps or tries to manipulate data, they are practicing junk science.

An example of this is found with the autism-vaccine debate. You may have heard that certain vaccines put the health of young children at risk. This persistent idea is not supported by scientific evidence or accepted by the vast majority of experts in the field. It stems largely from an elaborate medical research fraud that was reported in a 1998 article published in the respected British medical journal, The Lancet. The main author of the article was a British physician named Andrew Wakefield. In the article, Dr. Wakefield and his colleagues described case histories of 12 children, most of whom were reported to have developed autism soon after the administration of the MMR (measles, mumps, rubella) vaccine.

Several subsequent peer-reviewed studies failed to show any association between the MMR vaccine and autism. It also later emerged that Wakefield had received research funding from a group of people who were suing vaccine manufacturers. In 2004, ten of Wakefield’s 12 coauthors formally retracted the conclusions in their paper. In 2010, editors of The Lancet retracted the entire paper. That same year, Wakefield was charged with deliberate falsification of research and barred from practicing medicine in the United Kingdom. Unfortunately, by then, the damage had already been done. Parents afraid that their children would develop autism had refrained from having them vaccinated. British MMR vaccination rates fell from nearly 100 per cent to 80 per cent in the years following the study. The consensus of medical experts today is that Wakefield’s fraud put hundreds of thousands of children at risk because of the lower vaccination rates and also diverted research efforts and funding away from finding the true cause of autism.

Figure 3: A Rough Guide to Spotting Bad Science.

Correlation-Causation Fallacy

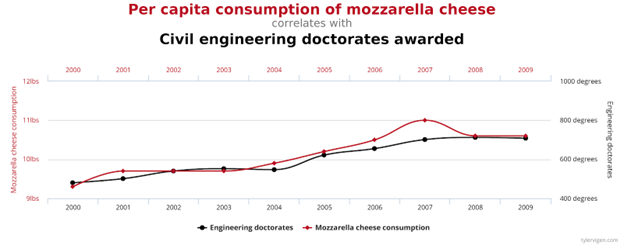

Many statistical tests used in scientific research calculate correlations between variables. Correlation refers to how closely related two data sets are, which may be a useful starting point for further investigation. A correlation can be positive, meaning that as one variable increases so does the other, or negative, meaning that as one variable increases the other decreases. Correlation, however, is also one of the most misused types of evidence, primarily because of the logical fallacy that correlation implies causation. In reality, just because two variables are correlated does not necessarily mean that either variable causes the other.
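To make the idea of positive and negative correlation concrete, here is a minimal sketch that computes the Pearson correlation coefficient, one common measure of correlation. The function name `pearson_r` and all of the numbers are hypothetical, invented only for illustration; they are not data from any study discussed in this chapter.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the mean (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical measurements, invented for illustration only.
hours_of_sunlight = [2, 4, 6, 8, 10]
plant_height_cm = [5, 9, 14, 18, 22]    # rises as sunlight rises
days_to_flower = [40, 35, 31, 26, 20]   # falls as sunlight rises

r_positive = pearson_r(hours_of_sunlight, plant_height_cm)
r_negative = pearson_r(hours_of_sunlight, days_to_flower)
print(f"positive correlation: r = {r_positive:.2f}")
print(f"negative correlation: r = {r_negative:.2f}")
```

A value of r near +1 indicates a strong positive correlation, a value near -1 a strong negative correlation, and a value near 0 little or no linear relationship. Note that r describes only how the two variables move together; it says nothing about whether one causes the other.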

A simple example (Fig 4) can be used to demonstrate the correlation-causation fallacy. Assume a study found that per capita consumption of mozzarella cheese and the number of Civil Engineering doctorates awarded are correlated; that is, both increase together. If correlation really did imply causation, then you could conclude that eating more mozzarella cheese causes more Civil Engineering doctorates to be awarded, or vice versa.

Figure 4: Spurious Correlations [Causation Fallacy] – Consumption of mozzarella cheese and awarded Doctorates

An actual example of the correlation-causation fallacy occurred during the latter half of the 20th century. Numerous studies showed that women taking hormone replacement therapy (HRT) to treat menopausal symptoms also had a lower-than-average incidence of coronary heart disease (CHD). This correlation was misinterpreted as evidence that HRT protects women against CHD. Subsequent studies that controlled other factors related to CHD disproved this presumed causal connection. The studies found that women taking HRT were more likely to come from higher socio-economic groups, with better-than-average diets and exercise regimens. Rather than HRT causing lower CHD incidence, these studies concluded that HRT and lower CHD were both effects of higher socio-economic status and related lifestyle factors.
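The role of a hidden third factor can be illustrated with a small simulation. The sketch below is not a model of the real HRT studies: every probability in it is an invented assumption, and the names `simulate` and `chd_rate` are hypothetical. In this made-up population, socio-economic status (SES) influences both who takes HRT and who develops CHD, while HRT itself has no effect on CHD at all.

```python
import random

def simulate(n=200_000, seed=1):
    """Simulate people as (high_ses, takes_hrt, has_chd) tuples.

    All probabilities are invented for illustration. CHD risk depends
    only on SES, never on HRT.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        high_ses = rng.random() < 0.5
        takes_hrt = rng.random() < (0.6 if high_ses else 0.2)
        has_chd = rng.random() < (0.05 if high_ses else 0.15)  # ignores takes_hrt
        people.append((high_ses, takes_hrt, has_chd))
    return people

def chd_rate(people, *, takes_hrt, high_ses=None):
    """Fraction with CHD among people matching the HRT (and optional SES) filter."""
    group = [p for p in people
             if p[1] == takes_hrt and (high_ses is None or p[0] == high_ses)]
    return sum(p[2] for p in group) / len(group)

people = simulate()

# Ignoring SES, HRT users appear to be protected against CHD.
overall_hrt = chd_rate(people, takes_hrt=True)
overall_no_hrt = chd_rate(people, takes_hrt=False)
print(f"all people: HRT {overall_hrt:.3f} vs no HRT {overall_no_hrt:.3f}")

# Comparing within each SES group, the apparent protection disappears.
for ses, label in [(True, "high SES"), (False, "low SES")]:
    with_hrt = chd_rate(people, takes_hrt=True, high_ses=ses)
    without_hrt = chd_rate(people, takes_hrt=False, high_ses=ses)
    print(f"{label}: HRT {with_hrt:.3f} vs no HRT {without_hrt:.3f}")
```

In the pooled comparison, HRT users show a noticeably lower CHD rate simply because more of them belong to the high-SES group. Once people are compared only with others of the same SES, the rates are nearly identical, which mirrors the way controlling for other factors removed the presumed causal connection.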

Summary

The scientific method is a systematic and logical approach used by scientists to investigate and understand the natural world. It consists of a series of steps and principles designed to ensure the reliability and validity of scientific research. The importance of the scientific method in studying the natural world cannot be overstated, as it forms the foundation of all scientific inquiry and contributes to the advancement of knowledge in various fields. The scientific method emphasizes objectivity and impartiality in the pursuit of knowledge, reducing the influence of personal biases and opinions. As such, predictions should be tested through experiments that apply the scientific method, and the results should be reproducible by other researchers, which ensures their reliability.

Key Steps of the Scientific Method:

- Observation: The process begins with the observation of a phenomenon or a problem in the natural world. This observation serves as the starting point for scientific investigation.

- Question: Based on the observation, scientists formulate a clear and specific research question that they aim to answer through their experiments or studies.

- Hypothesis: A hypothesis is a testable and falsifiable statement or educated guess that proposes a possible explanation for the observed phenomenon. It serves as the foundation for further research.

- Experimentation: Scientists design and conduct experiments to test their hypotheses. This involves manipulating variables, collecting data, and analyzing the results in a controlled and systematic manner.

- Data Analysis: The collected data is analyzed using statistical and analytical techniques to draw meaningful conclusions. The results are often presented graphically or in tables.

- Conclusion: Based on the data analysis, scientists draw conclusions about whether their hypothesis was supported or rejected. These conclusions contribute to our understanding of the natural world.

- Communication: Scientists communicate their findings through research papers, presentations, or peer-reviewed journals, allowing other researchers to evaluate and replicate their work.
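The experimentation and data analysis steps above can be sketched with a small worked example. The code below compares a control group with a treatment group using an exact permutation test, which is only one of many possible analysis techniques and is not prescribed by this chapter. The plant-height measurements and the function names are hypothetical, invented for illustration.

```python
from itertools import combinations

def mean(values):
    return sum(values) / len(values)

def permutation_test(control, treatment):
    """Exact two-sided permutation test on the difference in group means.

    Returns (observed_difference, p_value). The p-value is the fraction of
    all possible relabelings of the pooled data whose difference in means
    is at least as large in magnitude as the observed one.
    """
    pooled = control + treatment
    observed = mean(treatment) - mean(control)
    extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), len(treatment)):
        chosen_set = set(chosen)
        relabeled_treatment = [pooled[i] for i in chosen]
        relabeled_control = [v for i, v in enumerate(pooled) if i not in chosen_set]
        diff = mean(relabeled_treatment) - mean(relabeled_control)
        # Small tolerance guards against floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
        total += 1
    return observed, extreme / total

# Hypothetical plant heights (cm), invented for illustration only.
control = [10.1, 9.8, 10.4, 9.9, 10.2, 10.0]      # no fertilizer
treatment = [11.0, 11.4, 10.9, 11.2, 11.5, 11.1]  # fertilizer added

observed, p_value = permutation_test(control, treatment)
print(f"observed difference in means: {observed:.2f} cm")
print(f"p-value: {p_value:.4f}")
```

The control group provides the baseline: without it, there would be nothing to compare the treated plants against. A small p-value means that a difference this large would rarely arise if the group labels were assigned by chance alone, so the data support the hypothesis that the treatment had an effect. It does not prove the hypothesis, which is why replication by other researchers remains essential.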

When engaging in the scientific method, researchers can consider basic scientific research or applied scientific research. The goal of basic science is to expand our understanding of the natural world, uncovering fundamental principles and laws governing various phenomena. The primary goal is to enhance knowledge for its own sake rather than for immediate practical applications. In contrast, the goal of applied science is to use scientific knowledge to solve specific problems, improve processes, or develop practical applications. It focuses on the “how to” and “what for.”

When scientists are designing experiments and considering results, they need to ensure they are practicing “good science”. Biased or unreliable science can mislead the public and result in harm. In particular, pseudoscience and junk science should be identified as such and treated with appropriate skepticism. Pseudoscience refers to practices, beliefs, or claims that may appear to be scientific but lack the essential elements of genuine scientific inquiry. Pseudoscientific claims often lack empirical evidence or rely on anecdotal evidence rather than rigorous experimentation and data analysis. Pseudoscientific ideas are often presented in a way that makes them difficult to test or falsify.

Junk science refers to research, studies, or claims that are presented as scientific but lack rigor, validity, or adherence to the scientific method. Key characteristics of junk science include poor methodology, such as flawed experimental designs, inadequate sample sizes, or biased data collection methods. Findings or conclusions from junk science may be exaggerated, misrepresented, or taken out of context to support a particular agenda.

In summary, when scientists study the natural world, they aim to follow the scientific method, collect empirical evidence, and report their results through the peer-review process. Hypotheses can be supported or refuted through the use of the scientific method. When a hypothesis has stood the test of time and has support from many disciplines, we consider it a theory.

Questions

Glossary

References

Bartee, L., Shriner, W., & Creech, C. (n.d.). Principles of Biology. Pressbooks. Retrieved from https://openoregon.pressbooks.pub/mhccmajorsbio/chapter/using-credible-sources/

Clark, M.A., Douglas, M., & Choi, J. (2018). Biology 2e. OpenStax. Retrieved from https://openstax.org/books/biology-2e/pages/1-introduction

Molnar, C., & Gair, J. (2015). Concepts of Biology – 1st Canadian Edition. BCcampus. Retrieved from https://opentextbc.ca/biology/

TED-Ed. (2017, July 6). How to spot a misleading graph – Lea Gaslowitz. YouTube. https://www.youtube.com/watch?v=E91bGT9BjYk&feature=youtu.be

Wakefield, A.J., Murch, S.H., Anthony, A., Linnell, J., Casson, D.M., Malik, M., et al. (1998). Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Lancet, 351: 637–41.

Wikipedia contributors. (2020, June 18). Andrew Wakefield. Wikipedia. https://en.wikipedia.org/w/index.php?title=Andrew_Wakefield&oldid=963243135